Data sources and processing

At a technical level, Open Syllabus consists of essentially three components:

A web crawling architecture for collecting syllabi. We use a combination of general-purpose crawlers that scan for syllabi under a large set of seeds and a set of custom scrapers that target specific sites. We also mine syllabi out of Common Crawl and the Internet Archive.

A document parser that extracts structured metadata from the raw documents – course code, title, year, semester, field, institution, course description, book and article assignments, learning objectives, and more.

A batch processing pipeline that does ETL on the source datasets and runs model inference.

Pipeline

Syllabus classification

Syllabi are quite distinctive at a lexical level, and can be identified with simple bag-of-words classifiers. We use a simple XGBoost model over ngram features, trained on ~100k labeled documents, which has performance of F1=0.95 on test data.

It’s worth nothing that there’s some ambiguity about what does and doesn’t constitute a syllabus. For example, university websites often contain short “stub” pages about courses, that sit in a middle ground between a full-blown syllabus and a course catalog entry – they generally include a course code, title, date, and sometimes a description, but not the rich description of the course you’d get on a long-format syllabus document. When labeling training data, we currently define syllabi fairly loosely as a “document that describes a specific instance in which a course is taught.” Depending on your needs, though, this might mean that the number of documents that meet your requirements is somewhat lower than the overall count – for example, if you were only interested in syllabi with detailed week-by-week assignment sequences, or with long-format course description content.

In the future, we may train more granular models that can separate these different types of syllabi and syllabus-like documents – long-format syllabi, short syllabi, course catalog entries, course profile pages on department websites, etc.

Span extraction

Most syllabi are distributed as raw PDF / DOCX / HTML files. Some institutions use course management systems that represent syllabi in structured ways internally, but when scraped from the web, we just see the rendered HTML. So in practice, when working with syllabi at scale across hundreds of institutions, we’re effectively dealing with a big heap of unstructured text blobs.

To convert these into a format that can easily analyzed, Open Syllabus develops an end-to-end document parser for syllabi that converts the documents into structured metadata. The backbone of this is a span extraction architecture (“Syllaparse”) that performs an initial segmentation of the document. The raw text is mapped into a taxonomy of 22 entity types:

title– The title of the course.code– The catalog code for the course, generally a department prefix and number (CS 101).section– The section identifier for the course (eg, the03inCS 101-03)date– When the course was taught. Usually a semester + year –Spring 2021.class_days– Days the class meets.class_time– Time of day the class meets.class_location– Location of the class meetings, generally a building name and room number.instructor– The name of the instructor(s).instructor_phone– The instructor’s phone number.office_location– Location of the office hours, generally a building name and office number.office_hours_days– The days when office hours are held.office_hours_times– The times when office hours are held.credits– The number and/or type of credits that the course is worth.description– A free-text description of the course, usually roughly a paragraph in length.learning_outcomes– A list of things that students are expected to learn in the course.citations– Book and article citation strings.required_reading– A citation for a single “required” reading for the course.grading_rubric– How grades are assigned; often a mapping numeric grades to letter grades.assessment_strategy– A free-text description of how students are evaluated.topic_outline– A list of topics covered in the course.assignment_schedule– Chronologically-ordered lists of readings, topics, or assignments.school_name– The name of the institution where the course was taught.

See the schema documentation for more details.

Citation parsing + linking

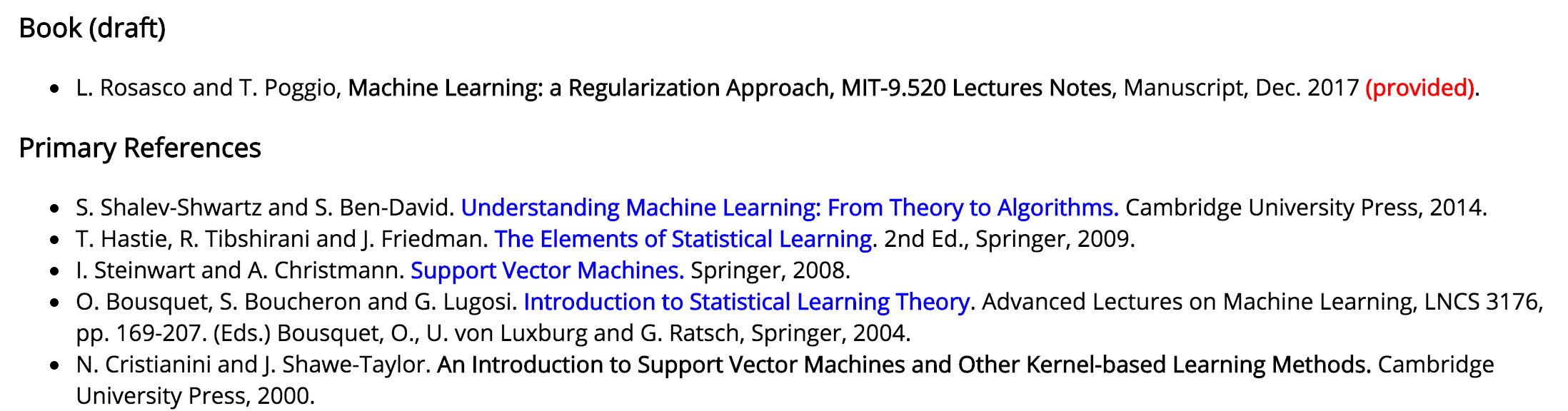

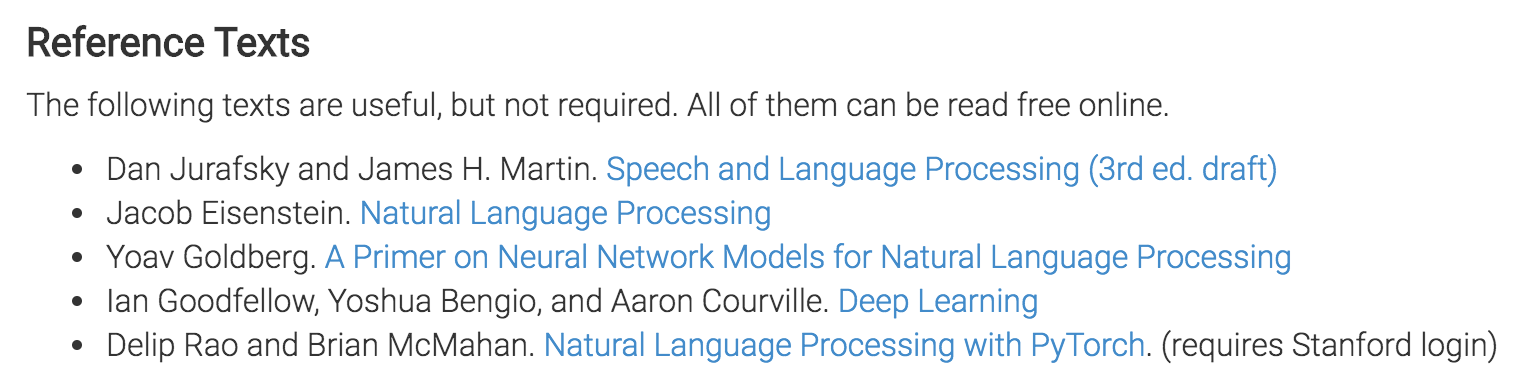

Syllabi are full of references to different kinds of resources – mainly books and articles, but also websites, YouTube videos, Wikipedia articles, movies, paintings, musical scores, and more. Sometimes these are represented as well-structured citations, like you’d find in research papers:

But, it’s also common to find more abbreviated styles in syllabi, often with just the title of the work and the author name:

Or sometimes with a specific edition or translation:

This variety in citation formats makes it difficult to use off-the-shelf citation extractors, which will often miss the more abbreviated formats. To get these out, we use two stacked span extraction models. First, the citation field from the top-level document parsing model provides a list of raw citation strings from the syllabus. Eg, from the examples above, we’d end up with spans like:

S. Shalev-Shwartz and S. Ben-David. Understanding Machine Learning: From Theory to Algorithms. Cambridge University Press, 2014.Dan Jurafsky and James H. Martin. Speech and Language Processing (3rd ed. draft)Invisible Man. Ralph Ellison. (Vintage)

We then pass these isolated citation strings into a second span extraction model that segments the citations into component parts - title, subtitle, author, publisher, organization, etc., which is trained on 25k hand-labeled citations extracted specifically from syllabi, to ensure that we’re handling the full range of citation styles that appear in the corpus.

Once we have isolated title and author spans from the citations, we can link these against a database of known bibliographic records to get standardized metadata for each citation. For example, for a citation like Invisible Man. Ralph Ellison., this would give us a list of ISBNs for the book, the year it was published, etc.

We currently link against a custom knowledge base of ~100M books and articles, formed from a combination of major sources for bibliographic data, including:

Near-duplicate clustering

Open Syllabus collects syllabi from institutional repositories, course catalogs, faculty homepages, and departmental websites, and we regularly re-crawl the same sites to pick up new documents as they come online. This means that we often pick up multiple copies of the same documents. It’s easy to drop out exact duplicates with checksum comparisons, but, as with any web crawling effort, there are lots of ways that documents can change change in subtle ways over time, even if the content hasn’t change in any meaningful way – site redesigns can the text in menus to change; date widgets can print out unique timestamps every time the page is requested; an instructor might fix a typo in a course description; and so on.

To remove these kinds of near-duplicates from the corpus, we use a “fuzzy” dedupe step that clusters together documents with text content that is similar but not identical. For each of the major “full-text” fields extracted by the top-level span extraction model, we use a minhash LSH index (via the great datasketch library) to cluster together documents with near-duplicate values (where Jaccard similarity >0.9). Then, these “bucketed” full-text hashes are combined with a set of exact values from structured metadata fields to form a set of final deduplication keys, which effectively serve as a kind of composite primary key for the document. In 2.8 we use:

Institution ID

Year

Semester

Course code

Section number

The books and articles assigned in the course, represented as a set of canonical work IDs

Full-text LSH buckets for:

descriptionlearning_outcomecitationassignment_schedulegrading_rubricassessment_strategytopic_outline

The goal is to differentiate documents with any kind of “substantive” change where it might be valuable to retain multiple copies; but to discard duplicates with small or insignificant changes. The question of where exactly an insignificant change shades into a substantive change is somewhat fuzzy, though, and can be different depending on how the data is being used. Our approach to this may continue to evolve in the future.

In 2.8, the deduplication step removes ~50% of an initial set of 30M documents that pass the syllabus classifier, leaving ~15M deduplicated syllabi in the final corpus.

Field classification

The organization of fields and departments varies significantly across different institutions. To abstract over some of these differences and make it easy to facet syllabi by field across different schools, Open Syllabus classifies syllabi into a curated set of field labels based on the US Department of Education CIP codes (2010 version), but rolled up in places to avoid granular distinctions that aren’t consistently reflected in the department structure at many institutions.

Like with the syllabus classification, simple bag-of-words ngram models do fairly well with this. The current model has F1=0.84 across all 69 fields.

Academic Field |

Precision |

Recall |

F1 score |

|---|---|---|---|

Accounting |

0.96 |

0.90 |

0.93 |

Agriculture |

0.83 |

0.67 |

0.74 |

Anthropology |

0.93 |

0.86 |

0.89 |

Architecture |

0.81 |

0.75 |

0.78 |

Astronomy |

0.82 |

0.60 |

0.69 |

Atmospheric Sciences |

0.88 |

1.00 |

0.94 |

Basic Computer Skills |

0.75 |

0.76 |

0.76 |

Basic Skills |

0.77 |

0.62 |

0.69 |

Biology |

0.85 |

0.92 |

0.88 |

Business |

0.77 |

0.85 |

0.80 |

Career Skills |

0.47 |

0.32 |

0.38 |

Chemistry |

0.94 |

0.89 |

0.92 |

Chinese |

0.92 |

0.97 |

0.94 |

Classics |

0.67 |

0.67 |

0.67 |

Computer Science |

0.74 |

0.82 |

0.78 |

Construction |

0.69 |

0.53 |

0.60 |

Cosmetology |

0.98 |

0.98 |

0.98 |

Criminal Justice |

0.74 |

0.71 |

0.73 |

Criminology |

0.60 |

0.50 |

0.55 |

Culinary Arts |

0.81 |

0.97 |

0.88 |

Dance |

0.90 |

0.98 |

0.94 |

Dentistry |

1.00 |

0.97 |

0.99 |

Earth Sciences |

0.91 |

0.80 |

0.85 |

Economics |

0.93 |

0.92 |

0.92 |

Education |

0.87 |

0.87 |

0.87 |

Engineering |

0.66 |

0.70 |

0.68 |

Engineering Technician |

0.58 |

0.57 |

0.57 |

English Literature |

0.87 |

0.91 |

0.89 |

Film and Photography |

0.82 |

0.72 |

0.77 |

Fine Arts |

0.84 |

0.86 |

0.85 |

Fitness and Leisure |

0.83 |

0.75 |

0.79 |

French |

0.97 |

0.98 |

0.97 |

Geography |

0.87 |

0.89 |

0.88 |

German |

0.90 |

0.95 |

0.93 |

Health Technician |

0.71 |

0.70 |

0.71 |

Hebrew |

0.87 |

0.93 |

0.90 |

History |

0.90 |

0.91 |

0.90 |

Japanese |

0.95 |

1.00 |

0.98 |

Journalism |

0.93 |

0.99 |

0.96 |

Law |

0.82 |

0.82 |

0.82 |

Liberal Arts |

0.78 |

0.65 |

0.71 |

Library Science |

0.93 |

0.90 |

0.92 |

Linguistics |

0.97 |

0.87 |

0.92 |

Marketing |

0.82 |

0.82 |

0.82 |

Mathematics |

0.92 |

0.97 |

0.95 |

Mechanic / Repair Tech |

0.81 |

0.69 |

0.74 |

Media / Communications |

0.83 |

0.77 |

0.80 |

Medicine |

0.74 |

0.74 |

0.74 |

Military Science |

0.91 |

0.91 |

0.91 |

Music |

0.78 |

0.89 |

0.83 |

Natural Resource Management |

0.70 |

0.57 |

0.63 |

Nursing |

0.93 |

0.86 |

0.89 |

Nutrition |

0.88 |

0.81 |

0.85 |

Philosophy |

0.83 |

0.83 |

0.83 |

Physics |

0.83 |

0.89 |

0.86 |

Political Science |

0.87 |

0.93 |

0.90 |

Psychology |

0.86 |

0.92 |

0.89 |

Public Administration |

0.83 |

0.33 |

0.48 |

Public Safety |

0.71 |

0.76 |

0.74 |

Religion |

0.85 |

0.80 |

0.82 |

Sign Language |

0.98 |

1.00 |

0.99 |

Social Work |

0.90 |

0.86 |

0.88 |

Sociology |

0.92 |

0.74 |

0.82 |

Spanish |

0.94 |

0.94 |

0.94 |

Theatre Arts |

0.86 |

0.81 |

0.84 |

Theology |

0.94 |

0.81 |

0.87 |

Transportation |

0.69 |

0.53 |

0.60 |

Veterinary Medicine |

0.72 |

0.81 |

0.76 |

Women’s Studies |

0.80 |

0.91 |

0.85 |

Precision |

Recall |

F1 |

|

|---|---|---|---|

Accuracy |

0.84 |

||

Macro avg |

0.83 |

0.81 |

0.82 |

Weighted avg |

0.84 |

0.84 |

0.84 |